Where we stand

Amid the hype around A.I., we are witnessing a world where States increasingly adopt algorithmic decision-making systems as a magic wand that promises to "solve" social, economic, environmental, and political problems. In doing so, they spend public resources in questionable ways, share sensitive citizen data with private companies and, ultimately, dismiss any attempt at a collective, democratic, and transparent response to core societal challenges. To encourage critical thinking about this trend, this project seeks to contribute to the development of a feminist toolkit for questioning the algorithmic decision-making systems being deployed by the public sector.

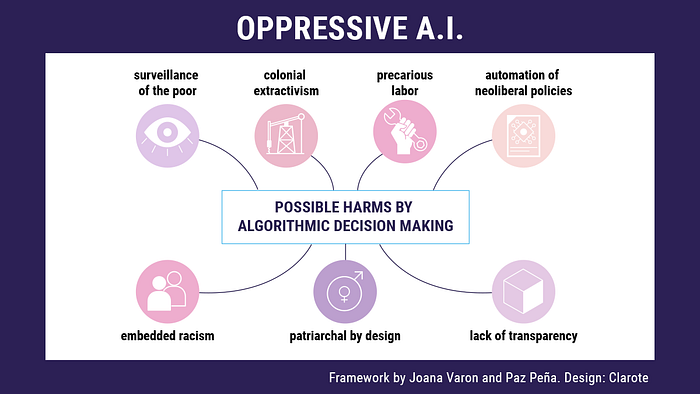

We don't believe in a fair, ethical, and/or inclusive AI if automated decision systems don't acknowledge the structural inequalities and injustices that affect the people whose lives these systems are meant to manage. Transparency is not enough if power imbalances are not taken into account. Furthermore, we are critical of the idea of AI systems being conceived to manage the poor or any other marginalized community. These systems tend to be designed by privileged demographics, against the free will of those who are likely to be targeted or "helped," and without their input or participation from the outset. The result is automated oppression and discrimination by Digital Welfare States that use math as an excuse to evade political responsibility.

Going beyond the liberal approach to Human Rights, which ignores power relations, feminist theories and practices build political structures that let us imagine other worlds based on solidarity, equity, and social-environmental justice. Therefore, we believe in supporting feminist movements in understanding the development of these emerging technologies so that, together and in solidarity, we can fight this automated social injustice.

Note on methodology

This project seeks to bring feminist movements closer to the social and political problems that many algorithmic decisions carry with them. To that end, we pose three research questions:

- What are the main reasons governments in Latin America implement artificial intelligence and other algorithmic decision-making processes to address issues in public services?

- What are the critical implications of such technologies for the enforcement of gender equality, cultural diversity, and sexual and reproductive rights?

- How can we adapt feminist theories to provide advocacy guidelines that rebalance the power dynamics enforced by the use of A.I. and other algorithmic decision-making systems?

To do so, we carried out an extensive literature review, held workshops, and selected case studies in which these systems were used to manage poverty or other social security programs. As a result, we left the trend we mapped of adopting A.I. for predictive policing out of our in-depth analysis and focused on programs that showcase manifestations of a Digital Welfare State. For these cases, we worked with public information, focusing mainly on interrogating the discourses of the creators of these technologies, which permeate public opinion and influence decision-makers. We firmly believe that this approach allows us to reveal strategies and political categories that can bring these issues closer to the broader feminist agenda and advance a feminist investigation of digital technologies.

Questions or suggestions? Please reach us at contact@notmy.ai.

Coding Rights

Hacking the patriarchy since 2015